In Alberta’s oil and gas industry, data is generated at the edge, not in the cloud.

Well pads, compressor stations, pipelines, pump jacks, and processing facilities continuously produce telemetry such as pressure, temperature, flow rate, vibration, and equipment health metrics. The real challenge is not collecting data — it is moving that data reliably and economically from the field into cloud systems that engineers, analysts, and leadership can trust.

As systems scale, direct device-to-cloud designs often break down.

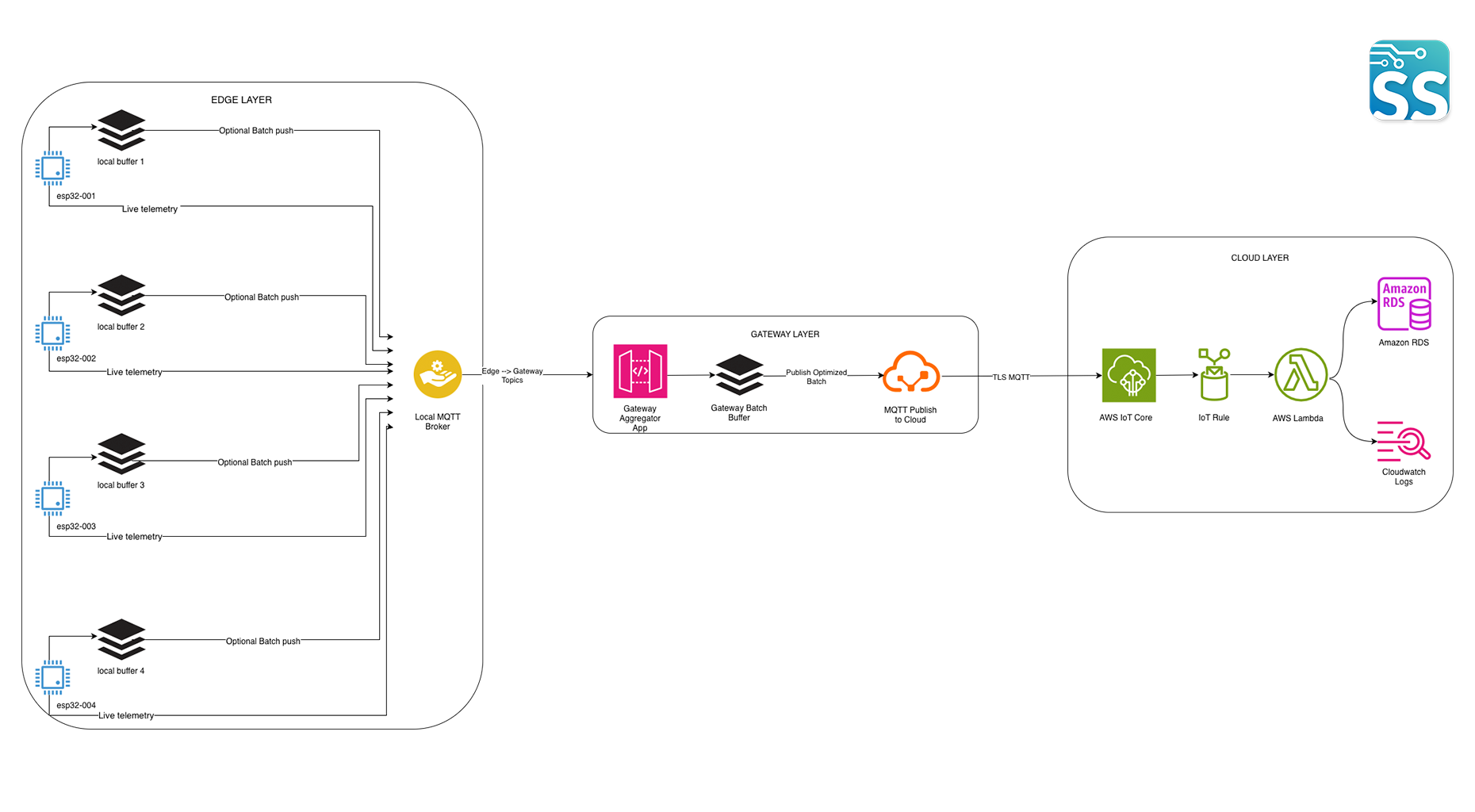

This architecture reflects a pattern widely used in production oil & gas IoT systems across North America.

This blog explains how a Gateway Aggregator + Batching → AWS IoT → Lambda → PostgreSQL architecture solves those problems — and why cloud and platform teams prefer it for large-scale industrial deployments.

🛢️ What Is This Architecture?

Gateway Aggregator + Batching Architecture

Each layer has a clear responsibility:

- Edge Devices (ESP32, STM32, sensors): Collect telemetry and publish locally using MQTT.

- Local MQTT Broker: Provides reliable, low-latency communication inside the site or facility.

- Gateway Aggregator Application: Subscribes to all edge telemetry, aggregates messages, and applies buffering and batching logic.

- AWS IoT Core: Secure cloud ingress point with authentication, authorization, and routing.

- AWS Lambda: Validates, transforms, and persists telemetry.

- PostgreSQL (RDS): Durable storage for analytics, reporting, dashboards, and compliance.

This architecture decouples field devices from the cloud, introducing a controlled gateway boundary between operational technology (OT) and IT systems.

❓ Why Not Send All Edge Devices Directly to the Cloud?

This is a common question — especially when teams are early in cloud adoption.

Direct device-to-cloud communication works for small deployments, but it introduces serious challenges at scale:

- ❌ High cellular or satellite data costs

- ❌ Thousands of cloud identities and certificates to manage

- ❌ Database overload from high-frequency writes

- ❌ Poor resilience during network outages

- ❌ Tight coupling between firmware and cloud systems

A gateway aggregator solves this by acting as a single, controlled cloud-facing entity.

🔁 Why Gateway Aggregation + Batching?

1️⃣ Network Efficiency

Instead of sending hundreds of small messages per second:

- The gateway batches telemetry into optimized payloads

- Cloud traffic becomes predictable

This is critical in oil & gas environments using:

- Cellular

- LTE-M

- Satellite

- Remote radio links
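To make the bandwidth argument concrete, here is a minimal sketch that compares the bytes needed to send readings one by one against a single compressed batch. The device name, field names, and the use of gzip are assumptions for illustration; the numbers also ignore per-message MQTT, TLS, and TCP overhead, which would favor batching further.

```python
import gzip
import json


def payload_sizes(readings):
    """Return (total bytes as individual JSON messages, bytes as one gzipped batch)."""
    individual = sum(
        len(json.dumps(r, separators=(",", ":")).encode("utf-8")) for r in readings
    )
    batch = gzip.compress(json.dumps(readings, separators=(",", ":")).encode("utf-8"))
    return individual, len(batch)


# Hypothetical data: 200 samples from one pressure sensor.
readings = [
    {"device_id": "pump-07", "ts": 1700000000 + i, "pressure_kpa": 850.0 + (i % 5)}
    for i in range(200)
]
individual_bytes, batched_bytes = payload_sizes(readings)
print(f"individual: {individual_bytes} B, batched+gzip: {batched_bytes} B")
```

Telemetry compresses well because every reading repeats the same keys, so the gain grows with batch size. If the batch is compressed, the cloud side has to decompress it before parsing.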

2️⃣ Reliability During Network Outages

When the cloud link drops:

- Edge devices continue publishing locally

- Gateway buffers data on disk

- Once connectivity returns, batches are flushed safely.

This prevents data loss during outages, up to the gateway's buffer capacity, which is essential for:

- Root cause analysis

- Production reporting

- Regulatory audits
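The store-and-forward behaviour described above could be sketched roughly as follows. This is not a specific product's implementation: the `DiskBuffer` class, the `outbox` table, and the `publish` callback are hypothetical names, and SQLite is just one convenient on-disk store available in Python's standard library.

```python
import json
import sqlite3


class DiskBuffer:
    """Store-and-forward queue backed by SQLite so batches survive restarts."""

    def __init__(self, path):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
        )
        self.db.commit()

    def enqueue(self, batch):
        """Persist a batch before any attempt to send it."""
        self.db.execute("INSERT INTO outbox (body) VALUES (?)", (json.dumps(batch),))
        self.db.commit()

    def flush(self, publish):
        """Send oldest-first; delete a row only after publish() returns True."""
        sent = 0
        rows = self.db.execute("SELECT id, body FROM outbox ORDER BY id").fetchall()
        for row_id, body in rows:
            if not publish(json.loads(body)):
                break  # link still down: keep this batch and everything after it
            self.db.execute("DELETE FROM outbox WHERE id = ?", (row_id,))
            self.db.commit()
            sent += 1
        return sent

    def pending(self):
        """Number of batches still waiting to be delivered."""
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]
```

Because a row is deleted only after a confirmed publish, a crash between publish and delete can resend a batch. That gives at-least-once delivery, so the cloud side should tolerate duplicates.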

3️⃣ Cloud Cost Control

Batching reduces:

- AWS IoT message volume

- Lambda invocation count

- Database write amplification

For long-running field assets, this translates to significant operational savings.
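A quick back-of-the-envelope calculation shows where the savings come from. The site size, sampling interval, and batch window below are made-up inputs, and the sketch counts messages only; it does not model actual AWS prices.

```python
import math

SECONDS_PER_DAY = 86_400


def daily_cloud_messages(devices, interval_s, batch_window_s=None):
    """Cloud-bound messages per day for one site.

    With an IoT rule that forwards every message to Lambda, this is also
    the number of Lambda invocations per day.
    """
    if batch_window_s is None:
        # Direct device-to-cloud: every reading is its own message.
        return devices * (SECONDS_PER_DAY // interval_s)
    # Gateway: one batch per window, regardless of device count.
    return math.ceil(SECONDS_PER_DAY / batch_window_s)


# Hypothetical site: 50 devices reporting every 10 seconds.
direct = daily_cloud_messages(devices=50, interval_s=10)
batched = daily_cloud_messages(devices=50, interval_s=10, batch_window_s=60)
print(direct, batched, direct // batched)  # 432000 1440 300
```

AWS IoT Core pricing also depends on payload size, so a large batch may be billed as more than one message. Check the current pricing page before turning message counts into dollar figures.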

🔐 Security Boundary (Why Cloud Teams Like This)

From a cloud security perspective, this architecture is clean and defensible:

- Only the gateway has AWS credentials

- Edge devices never touch the public internet

- Certificates and their attached policies are limited to the gateway, a single trust boundary

- Databases remain private inside a VPC

- No inbound firewall rules are required at the site, because the gateway initiates the outbound connection

This dramatically simplifies:

- Threat modeling

- Incident response

- Certificate rotation

- Compliance reviews
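As an illustration of how narrow the gateway's permissions can be, the sketch below builds a least-privilege AWS IoT policy document that only allows connecting as one client ID and publishing to one batch topic. The `gateway_policy` helper and the region, account, site, and client values are placeholders, not values from a real deployment.

```python
import json


def gateway_policy(region, account_id, site_id, client_id):
    """Build an AWS IoT policy that lets one gateway connect and publish batches only."""
    base = f"arn:aws:iot:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "iot:Connect",
                "Resource": f"{base}:client/{client_id}",
            },
            {
                "Effect": "Allow",
                "Action": "iot:Publish",
                "Resource": f"{base}:topic/cloud/{site_id}/telemetry/batch",
            },
        ],
    }


# Placeholder identifiers for illustration only.
policy = gateway_policy("ca-central-1", "123456789012", "site-12", "gateway-site-12")
print(json.dumps(policy, indent=2))
```

With a policy like this attached to the gateway's certificate, a compromised gateway can publish to its own site's topic but cannot subscribe to, or publish as, another site.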

🧠 Why Store Batched Telemetry in PostgreSQL?

PostgreSQL is a strong backend choice for industrial telemetry:

- Handles time-series workloads efficiently when tables are indexed (and, at larger volumes, partitioned) by time

- Supports JSONB for flexible sensor payloads

- Strong indexing and query performance

- Easy integration with analytics and BI tools

Common downstream use cases:

- Historical trend analysis

- Production optimization

- Alarm investigations

- Predictive maintenance

- Regulatory and ESG reporting

Databases create long-term business value, not just real-time visibility.

🛢️ How This Architecture Helps Oil & Gas Businesses

Centralized Visibility

Field assets are geographically distributed; decision-making should not be. This design consolidates telemetry across sites into a single, reliable data source.

Reduced Downtime

With reliable historical data, teams can:

- Detect abnormal trends early

- Predict failures

- Schedule maintenance proactively

This reduces:

- Emergency call-outs

- Production losses

- Safety incidents

Scales from Pilot to Field Rollout

The same architecture supports:

- One test well

- Ten sites

- Hundreds of field assets

No redesign is required.

👷 Why Cloud & Platform Teams Prefer This Pattern

This architecture helps engineering leaders by:

- Clearly separating edge, gateway, and cloud responsibilities

- Reducing blast radius during failures

- Allowing independent scaling of each layer

- Simplifying ownership between firmware and cloud teams

- Avoiding custom backend services

This leads to:

- ✔ Faster onboarding

- ✔ Fewer production incidents

- ✔ Easier handoffs

- ✔ Cleaner long-term maintenance

🔧 How Does the Data Flow Work?

Step 1 — Edge Devices Publish Locally

Devices publish telemetry to structured topics:

site/{site_id}/devices/{device_id}/telemetry
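A small pair of helpers can keep this topic layout consistent between firmware tooling and the gateway. The function names are hypothetical; the only things taken from the text above are the topic structure and the standard MQTT single-level wildcard `+`.

```python
# Subscription filter a gateway could use to receive telemetry from every device.
SITE_WILDCARD = "site/+/devices/+/telemetry"


def telemetry_topic(site_id, device_id):
    """Build the local topic a device publishes to."""
    return f"site/{site_id}/devices/{device_id}/telemetry"


def parse_telemetry_topic(topic):
    """Return (site_id, device_id), or raise ValueError for any other topic shape."""
    parts = topic.split("/")
    if (
        len(parts) != 5
        or parts[0] != "site"
        or parts[2] != "devices"
        or parts[4] != "telemetry"
    ):
        raise ValueError(f"unexpected topic: {topic!r}")
    return parts[1], parts[3]


print(parse_telemetry_topic(telemetry_topic("site-12", "pump-07")))
```

Parsing the IDs from the topic, rather than trusting fields inside the payload, lets the gateway tag each reading with where it actually arrived from.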

Step 2 — Gateway Aggregates & Buffers

The gateway:

- Subscribes to all site topics

- Writes incoming data to a local batch buffer

- Groups messages by time window or size
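Grouping by time window or size might look something like the sketch below. The `Batcher` class and its default thresholds are illustrative assumptions, not recommended values; the clock is injectable so the behaviour can be tested without waiting.

```python
import time


class Batcher:
    """Collect readings and release them when the batch is full or too old."""

    def __init__(self, max_items=500, max_age_s=30.0, clock=time.monotonic):
        self.max_items = max_items
        self.max_age_s = max_age_s
        self.clock = clock
        self.items = []
        self.opened_at = None

    def add(self, reading):
        """Add one reading; return a finished batch if a threshold was crossed, else None."""
        if not self.items:
            self.opened_at = self.clock()  # the window starts with the first reading
        self.items.append(reading)
        return self.poll()

    def poll(self):
        """Return the batch if it is full or stale; call on a timer so quiet sites still flush."""
        if not self.items:
            return None
        full = len(self.items) >= self.max_items
        stale = self.clock() - self.opened_at >= self.max_age_s
        if full or stale:
            batch, self.items = self.items, []
            return batch
        return None
```

The size limit bounds payload size during bursts, while the age limit bounds how late data can arrive when traffic is light.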

Step 3 — Gateway Publishes Batched MQTT to AWS

Batches are published securely via TLS:

cloud/{site_id}/telemetry/batch

This reduces message volume and improves throughput.
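One possible shape for the batch message is sketched here. The envelope fields (`batch_id`, `count`, `readings`) are assumptions for illustration. The size guard reflects AWS IoT Core's documented 128 KB MQTT payload limit, which is worth re-checking against current service quotas. The actual publish call is left out because it depends on the MQTT client in use.

```python
import json
import uuid

# Documented AWS IoT Core MQTT payload limit; verify against current quotas.
MAX_PAYLOAD_BYTES = 128 * 1024


def build_batch_message(site_id, readings, batch_id=None):
    """Return (topic, payload_bytes) for one batch, or raise if it is too large."""
    envelope = {
        "batch_id": batch_id or str(uuid.uuid4()),  # lets the cloud side spot resends
        "site_id": site_id,
        "count": len(readings),
        "readings": readings,
    }
    payload = json.dumps(envelope, separators=(",", ":")).encode("utf-8")
    if len(payload) > MAX_PAYLOAD_BYTES:
        raise ValueError(f"batch is {len(payload)} bytes; split it before publishing")
    return f"cloud/{site_id}/telemetry/batch", payload


topic, payload = build_batch_message(
    "site-12",
    [{"device_id": "pump-07", "ts": 1700000000, "pressure_kpa": 850.0}],
)
print(topic, len(payload))
```

Including a batch identifier is a design choice that makes duplicate detection possible when a batch is retried after a dropped connection.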

Step 4 — AWS IoT Rule → Lambda

AWS IoT routes batch payloads to Lambda for:

- Validation

- Normalization

- Persistence
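A handler for these three steps could be structured roughly as below. It assumes an IoT rule such as `SELECT * FROM 'cloud/+/telemetry/batch'` that passes the batch JSON through as the Lambda event, and a hypothetical batch format with `site_id` and `readings` fields. The `persist` function is a stub standing in for the PostgreSQL write.

```python
from datetime import datetime, timezone

REQUIRED_FIELDS = ("device_id", "ts")


def normalize(reading):
    """Return a cleaned copy of one reading, or None if it is invalid."""
    if not isinstance(reading, dict) or any(k not in reading for k in REQUIRED_FIELDS):
        return None
    try:
        # Assumes ts is a Unix epoch in seconds; convert to an ISO 8601 UTC string.
        ts = datetime.fromtimestamp(float(reading["ts"]), tz=timezone.utc)
    except (TypeError, ValueError, OverflowError, OSError):
        return None
    metrics = {k: v for k, v in reading.items() if k not in REQUIRED_FIELDS}
    return {"device_id": str(reading["device_id"]), "ts": ts.isoformat(), "metrics": metrics}


def persist(site_id, rows):
    """Placeholder: a real handler would insert rows into PostgreSQL here."""
    return len(rows)


def lambda_handler(event, context):
    """Validate and normalize each reading, store the good ones, report the counts."""
    readings = event.get("readings", [])
    rows = [r for r in map(normalize, readings) if r is not None]
    stored = persist(event.get("site_id"), rows)
    return {"received": len(readings), "stored": stored, "rejected": len(readings) - len(rows)}
```

Rejecting individual bad readings instead of failing the whole batch means one misbehaving sensor does not block data from the rest of the site.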

Step 5 — PostgreSQL Stores Telemetry

A typical schema supports:

- Fast time-range queries

- Flexible payloads

- Long-term retention
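As one example of such a schema, the sketch below keeps fixed columns for site, device, and timestamp, and stores the variable sensor fields as JSONB. Table and column names are illustrative, and the `%s` placeholders assume a psycopg-style PostgreSQL driver. Nothing here connects to a database; it only prepares the statements and row tuples.

```python
import json

SCHEMA_SQL = """
CREATE TABLE IF NOT EXISTS telemetry (
    site_id    TEXT        NOT NULL,
    device_id  TEXT        NOT NULL,
    ts         TIMESTAMPTZ NOT NULL,
    payload    JSONB       NOT NULL,
    PRIMARY KEY (site_id, device_id, ts)
);
CREATE INDEX IF NOT EXISTS telemetry_ts_idx ON telemetry (ts);
"""

# ON CONFLICT DO NOTHING makes re-delivered batches harmless.
INSERT_SQL = (
    "INSERT INTO telemetry (site_id, device_id, ts, payload) "
    "VALUES (%s, %s, %s, %s::jsonb) ON CONFLICT DO NOTHING"
)

# Per-device time-range query, served by the primary-key index.
RANGE_QUERY_SQL = (
    "SELECT ts, payload FROM telemetry "
    "WHERE site_id = %s AND device_id = %s AND ts >= %s AND ts < %s ORDER BY ts"
)


def to_rows(site_id, readings):
    """Flatten normalized readings into parameter tuples for INSERT_SQL."""
    return [
        (site_id, r["device_id"], r["ts"], json.dumps(r["metrics"], sort_keys=True))
        for r in readings
    ]


rows = to_rows(
    "site-12",
    [{"device_id": "pump-07", "ts": "2023-11-14T22:13:20+00:00", "metrics": {"pressure_kpa": 850.0}}],
)
print(rows[0])
```

For long-term retention at higher volumes, partitioning the table by time range is a common next step, though whether it is needed depends on data rates the post does not specify.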

🧭 Where This Leads Next

Once this foundation is in place, teams can easily add:

- Real-time dashboards (React, Grafana)

- Reporting pipelines

- Machine learning workloads

- Predictive maintenance

- Digital twin systems

This architecture is the backbone, not the final product.

🤝 Whom Can You Ask for Help?

If you’re planning or building:

- Gateway-based IoT systems

- Industrial edge-to-cloud pipelines

- Secure MQTT ingestion

- Cloud-backed telemetry platforms

You don’t need separate firmware, cloud, and data vendors. You need one architecture that connects them cleanly.

🚀 Quick Call-to-Action

Schedule Your Architecture Review

We’ll review your field setup, network constraints, and cloud goals — and map a gateway architecture that scales safely.

🚀 Conclusion

Gateway Aggregator + Batching is not an academic pattern — it is how real industrial IoT systems are built and operated. For oil and gas organizations, it delivers:

- Reliable telemetry ingestion

- Strong security boundaries

- Predictable cloud costs

- Scalable growth paths

If you’re building or modernizing industrial systems, this architecture delivers a proven, production-ready foundation for secure and scalable operations.

Learn more at Cloud Integration